The launch of ChatGPT, a generative AI tool developed by the tech company OpenAI, spurred a global discussion on the risks and benefits of artificial intelligence. Notably, ChatGPT is referred to as an “AI” tool, yet it is not really an example of artificial intelligence. ChatGPT does not think. ChatGPT does not act. ChatGPT has no will, agency or even consciousness. It is an incredibly sophisticated language model that can generate a variety of texts based on commands. Yet ChatGPT may be a stepping stone towards future innovations that will be truly artificial and intelligent.

The fact that ChatGPT is not intelligent has not stemmed the flow of blog posts, op-eds, newspaper columns, social media posts and tweets dealing with the impact of AI on society. One major concern, raised by tech moguls, academics and news pundits, is AI biases, or biases in the content generated by AI tools such as ChatGPT. The recurring argument is that content generated by AI tools may be highly skewed, or biased, and may replicate or strengthen inequalities in society. For instance, an AI based on police reports would be biased against racial minorities, as racial minorities are more likely to be arrested. If the data is biased, so too is the content generated from that data.

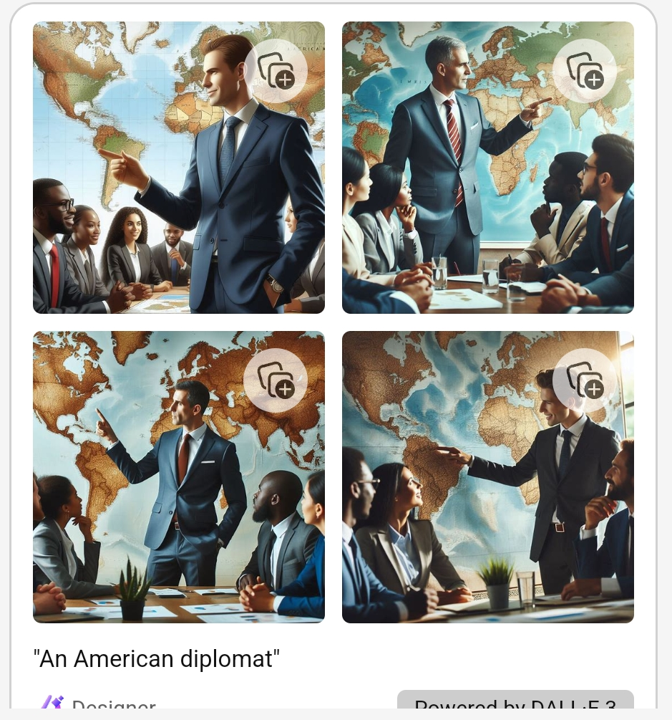

To try and identify possible biases in AI tools, and to demonstrate how they may sustain or even deepen social inequalities, I used Microsoft’s Copilot AI to generate images of diplomats. Copilot is one of many new AI tools which generate visuals based on textual commands. I first asked Copilot “Please generate an image of a diplomat”. In response, Copilot generated the four images seen below.

There are several noteworthy elements to these images of diplomats. First, there seems to be a high degree of racial or ethnic diversity: there is a Caucasian diplomat, a Black diplomat and an Asian one. There is, however, only one diplomat who is not male. And while there is only one female diplomat, women can be found in all four images listening to their male counterparts. These images might suggest to a user that diplomacy is inherently a male profession, while also suggesting that men talk while women listen. Second, the single female diplomat is dressed just like her male counterparts, in a pinstripe suit.

Although it is true that women are underrepresented in many foreign ministries (MFAs), and while it is true that senior positions in MFAs are often occupied by men rather than women, these images still offer a biased representation of women given that the request was quite general: “Please generate an image of a diplomat”. Crucially, AI biases may have a real impact on the beliefs, attitudes and imagination of users. Copilot users may come to believe that diplomacy is inherently a male profession. Their collective imagination may also become limited, failing to account for the possibility of female diplomats. This is of paramount importance given that collective imaginations shape societal norms, values and gendered roles.

Importantly, the images above all seem to depict ‘Western’ diplomats: there are no Muslim men or women in any of these images, nor are there any African diplomats or diplomats from Arab countries.

This was also true when I asked Copilot to generate images of American diplomats, which are shown below. These four images could easily have been labeled “mansplaining” or “Diplosplaining”: they are a series of visuals in which confident White men in suits explain world affairs to women and minorities. Here again one finds a skewed and biased representation of the world, given that the majority of diplomats in the world are not from North America or Western Europe. And yet, according to Copilot, diplomats are always ‘Western’.

The Global South was represented when I asked Copilot to generate images of Russian diplomats, as shown below. In fact, according to Copilot’s images, Russian diplomats engage solely with the Global South, while Global South diplomats seem eager to embrace their Russian counterparts. This of course obscures the complicated relationship between certain countries in the Global South and Russia. While it is true that Russia has historic ties with certain countries that suffered the oppressive yoke of imperialism and colonialism, not all countries in the Global South are eager to warmly embrace Russia. These images exemplify AI’s reductionist outputs, which are devoid of context and, again, create misconceptions about the world.

When asked to generate an image of a Qatari diplomat, Copilot generated the four images shown below. What is notable about these images is that Qatari diplomats only seem to engage with other Muslim or Qatari diplomats. Unlike their Western counterparts, Qatari diplomats do not engage with diplomats from across the world. They are also far less authoritative than their Western peers. On Copilot, Western diplomats govern while Muslim diplomats listen. Muslim diplomats have little agency, nor do they have a global outlook or impact. Whereas Western diplomats were depicted near maps of the world, Qatari diplomats are depicted in small rooms. Here again one encounters a clear bias in AI output which may negatively impact how users conceptualize or imagine the role of Muslim diplomats in the world.

I next asked Copilot to visualize different foreign ministries. The four images below were generated in response to the request “Please generate an image of the US State Department”. These visuals all clearly manifest America’s power, its standing as a superpower and its global orientation. But these images go a step further, as their composition almost suggests that America rules the world. In fact, these images conjure up nefarious organizations like SPECTRE from the James Bond films: malicious actors hell-bent on global domination. One can almost imagine Dr. Evil joining the table and asking about progress on his “big laser”.

Notably, all these images of the State Department are dominated by white men in suits chairing meetings with other white men in suits. One must go to extreme lengths to identify a woman or a member of a minority group in these visuals. As such, these visuals replicate and may even perpetuate power imbalances and hegemonic relations between America and other nations, and between men and women.

The Chinese MFA bore very different traits, as can be seen below. First, unlike those of the State Department, these visuals did not include any diplomats and were illustrations rather than pictures. In all four images one finds references to Chinese authoritarianism: the architecture is imposing, the flags and state emblems are omnipresent, and the sheer size of the building is meant to exude state power and prestige. Yet an image of the Chinese MFA generated by a Chinese GPT may have looked very different. Once again, ‘Western’ ideas and worldviews impact the output of this AI tool.

The visuals explored in this blog post are all demonstrative of AI biases. As individuals increasingly use AI tools to understand and visualize their world, these biases may have a greater impact. Diplomats and policy makers must begin to address these biases, which may deepen and ingrain global and social inequalities. One way of doing so is through partnerships with tech companies and academic scholars laboring to map and narrow AI biases. Another way may be national and global regulations that require AI companies to dedicate funds and resources to uncovering and narrowing AI biases. The time to regulate AI is now, before these companies and tools become too big to fail and too big to answer to regulatory bodies. The mistakes of social media should not be repeated with AI.